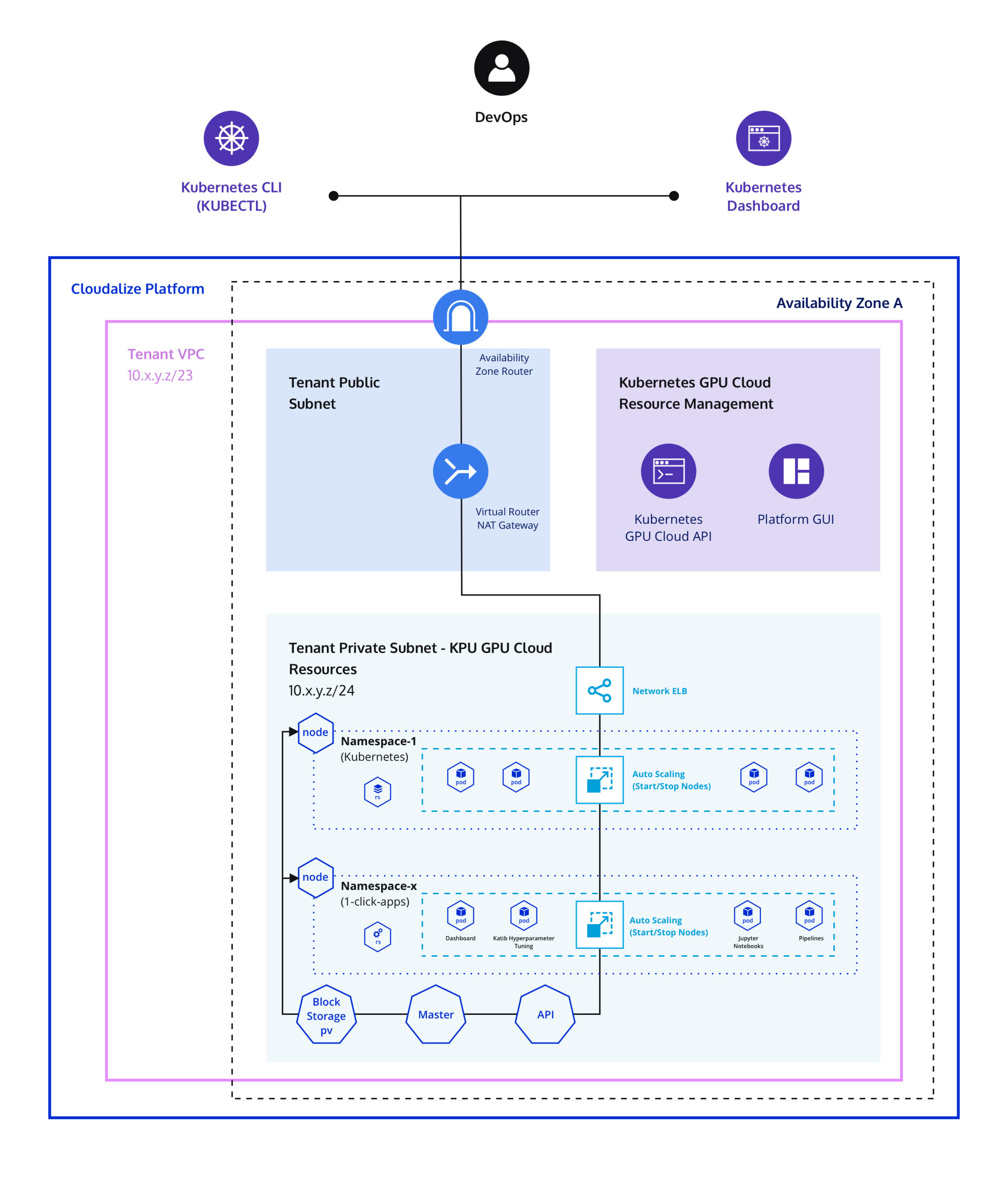

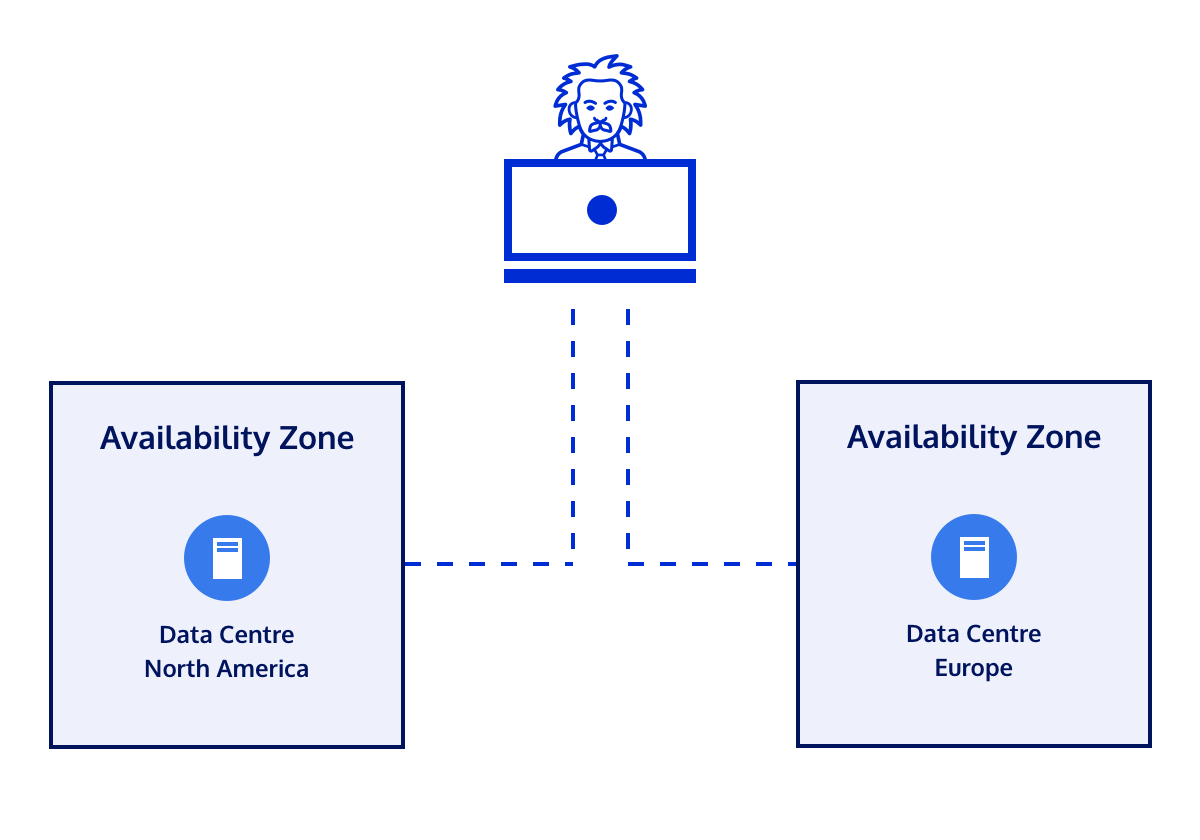

Availability Zone

The platform runs across several Availability Zones. Each zone hosts its own resources and defines its own entry point. There are currently two zones, located in New Jersey (US) and London (UK), where GPU resources can be accessed.

Tenant Virtual Private Container (VPC)

The Tenant Virtual Private Container (VPC) is a network segment dedicated to a single user or project, which securely isolates that tenant's resources. Within the Tenant VPC there is a Tenant Private Subnet, where the computing resources (the Kubernetes cluster) run, divided by Kubernetes Namespace. A Kubernetes Namespace acts as a virtual cluster: a general-purpose partition in which users can run almost any workload.
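To make the Namespace idea concrete, here is a minimal sketch that builds a standard Kubernetes Namespace manifest as a plain Python dictionary and prints it as JSON. The Namespace name `team-a-notebooks`, the `project` label, and the helper `namespace_manifest` are hypothetical and chosen only for illustration; they are not names used by the platform.

```python
import json


def namespace_manifest(name: str, project: str) -> dict:
    """Build a standard Kubernetes Namespace manifest as a plain dict.

    `name` and `project` are illustrative values, not platform defaults.
    """
    return {
        "apiVersion": "v1",
        "kind": "Namespace",
        "metadata": {
            "name": name,
            # Labels are optional; this one only tags the owning project.
            "labels": {"project": project},
        },
    }


manifest = namespace_manifest("team-a-notebooks", "team-a")
print(json.dumps(manifest, indent=2))
```

Since `kubectl` accepts JSON as well as YAML, the printed manifest could be saved to a file and applied with `kubectl apply -f`, assuming the account has permission to create Namespaces.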

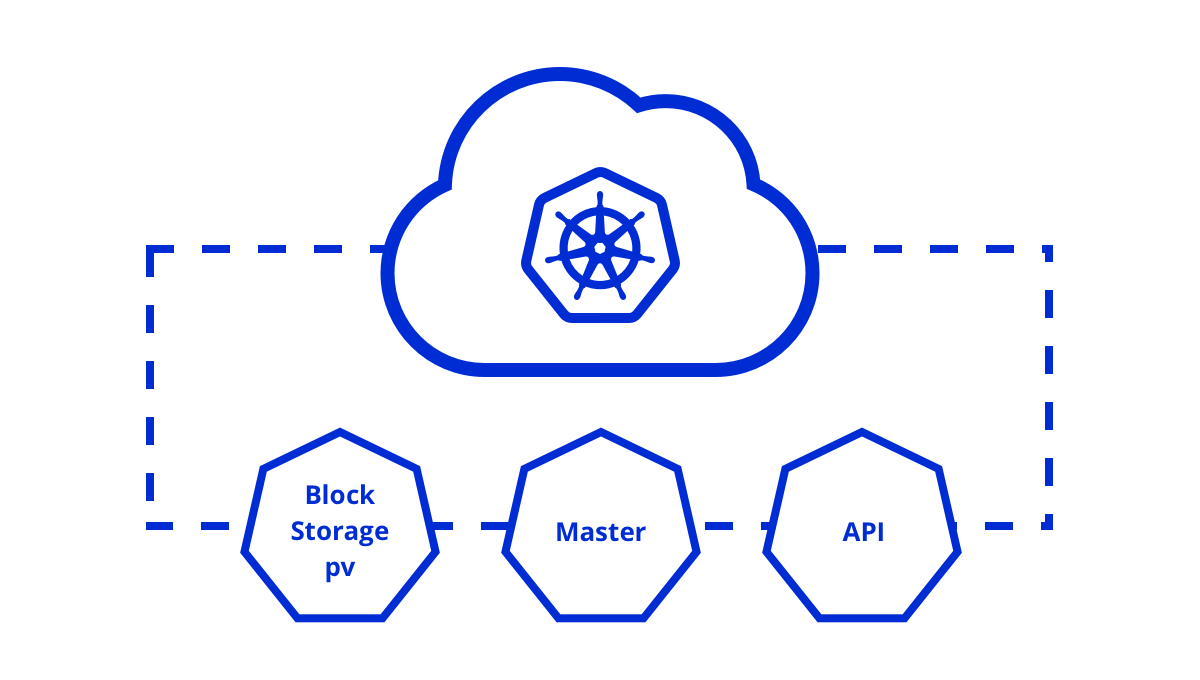

Kubernetes Components

With the One-click applications, users can have several Namespaces (Namespace-x) to run different applications such as Katib Hyperparameter Tuning, Jupyter Notebooks, and Pipelines within the same cluster. All these Namespaces share the same standard Kubernetes API, the Master, and the Block Storage. The Master serves as the Kubernetes Control Plane and can run either on a single virtual machine or in high-availability mode for continuous access to and management of resources. Block Storage, exposed as a Persistent Volume (PV), is an important component because it prevents data loss caused by scaling up, downtime, or a power outage.
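As an illustration of how a workload typically requests persistent storage in Kubernetes, the sketch below builds a PersistentVolumeClaim manifest. It assumes the cluster has a default storage class backed by the Block Storage; the claim name, Namespace, and size are hypothetical, and the platform may provision volumes differently.

```python
import json


def pvc_manifest(name: str, namespace: str, size: str = "10Gi") -> dict:
    """Build a PersistentVolumeClaim manifest as a plain dict.

    No storageClassName is set, so the cluster's default storage class
    (assumed here to be backed by Block Storage) would be used.
    """
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # ReadWriteOnce: the volume is mounted read-write by one Node.
            "accessModes": ["ReadWriteOnce"],
            "resources": {"requests": {"storage": size}},
        },
    }


claim = pvc_manifest("notebook-data", "team-a-notebooks", size="20Gi")
print(json.dumps(claim, indent=2))
```

A Pod that mounts this claim keeps its data when the Pod is rescheduled or restarted, which is the data-loss protection described above.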

Platform Connection

DevOps teams or users can connect to the platform in two ways: the Kubernetes CLI (kubectl) or the Kubernetes Dashboard. The Kubernetes GPU Cloud API and the Platform GUI serve as the entry point where users can create a new Kubernetes environment or store an existing one, using either of the two. The Kubernetes GPU Cloud API can also be used for resource management and reporting.

This service runs entirely over the Internet. To connect the public network (the public IP address, defined at the Availability Zone Router) to the Tenant VPC and the Tenant Private Subnet, a network translation component called the Net Gateway creates an internal connection through the Virtual Router, translating public network addresses into addresses on Cloudalize’s private network.
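The translation step can be pictured with a toy model. The sketch below is not the platform's implementation: the class `NatGateway`, its methods, the documentation-range public address `203.0.113.10`, and the subnet `10.0.1.0/24` are all assumptions made for illustration. It only shows the general idea of mapping a public address and port to a target inside a private subnet.

```python
import ipaddress


class NatGateway:
    """Toy model of public-to-private address translation (illustrative only)."""

    def __init__(self, public_ip: str, private_subnet: str):
        self.public_ip = ipaddress.ip_address(public_ip)
        self.private_subnet = ipaddress.ip_network(private_subnet)
        # Maps a public port to a (private IP, private port) target.
        self.forwards = {}

    def add_forward(self, public_port: int, private_ip: str, private_port: int):
        target = ipaddress.ip_address(private_ip)
        if target not in self.private_subnet:
            raise ValueError(f"{private_ip} is outside {self.private_subnet}")
        self.forwards[public_port] = (str(target), private_port)

    def translate(self, dst_ip: str, dst_port: int):
        """Return the private target for a public destination, or None."""
        if ipaddress.ip_address(dst_ip) != self.public_ip:
            return None
        return self.forwards.get(dst_port)


gateway = NatGateway("203.0.113.10", "10.0.1.0/24")
gateway.add_forward(443, "10.0.1.20", 6443)
print(gateway.translate("203.0.113.10", 443))
```

Traffic addressed to a port with no forwarding rule, or to a different public address, has no private target in this model, which mirrors how the private subnet stays unreachable unless a translation exists.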

Functionalities

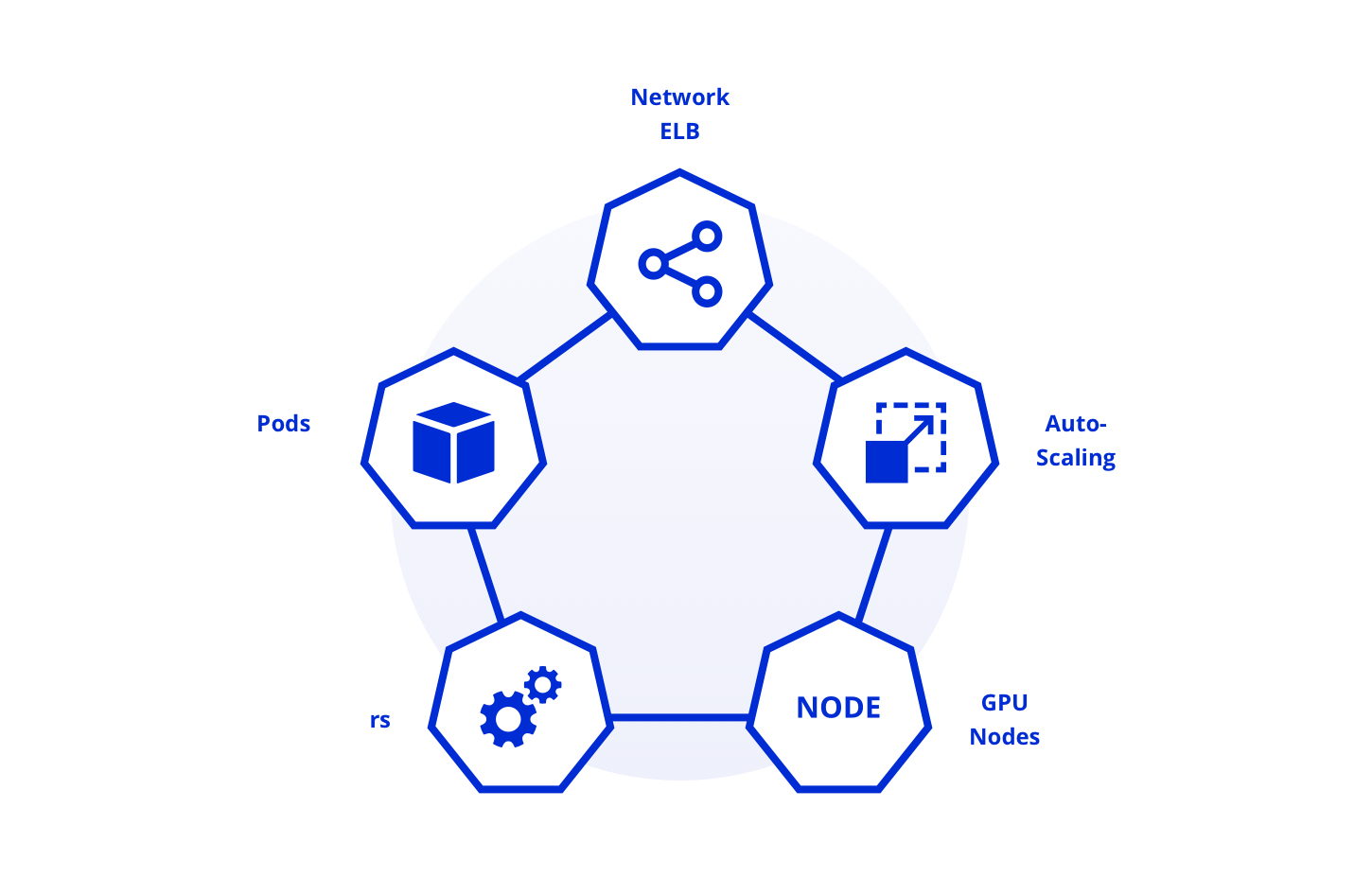

The Network Elastic Load Balancing (ELB) makes several Pods within a Namespace highly available to the outside world. The auto-scaling functionality scales resources up and down by automatically starting and stopping Nodes, which are GPU-powered virtual machines. Finally, the Replica Set (RS) provides high availability by guaranteeing that a specified number of identical Pods is running. For instance, with a Replica Set, users can run several identical copies of a web-interface Pod and spread them across Nodes within a Namespace.
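The web-interface example can be sketched with two standard Kubernetes objects: a ReplicaSet that keeps a fixed number of identical Pods running, and a Service of type `LoadBalancer` that exposes them. The names, labels, replica count, and the `nginx:1.25` image below are hypothetical, and how the platform wires a `LoadBalancer` Service to its ELB is an assumption.

```python
import json

LABELS = {"app": "web-ui"}  # hypothetical label shared by Pods and Service


def replicaset_manifest(name: str, namespace: str, replicas: int, image: str) -> dict:
    """ReplicaSet that keeps `replicas` identical Pods running."""
    return {
        "apiVersion": "apps/v1",
        "kind": "ReplicaSet",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels in the Pod template.
            "selector": {"matchLabels": LABELS},
            "template": {
                "metadata": {"labels": LABELS},
                "spec": {
                    "containers": [
                        {"name": "web", "image": image,
                         "ports": [{"containerPort": 80}]}
                    ]
                },
            },
        },
    }


def service_manifest(name: str, namespace: str) -> dict:
    """LoadBalancer Service that spreads traffic over the matching Pods."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "type": "LoadBalancer",
            "selector": LABELS,
            "ports": [{"port": 80, "targetPort": 80}],
        },
    }


rs = replicaset_manifest("web-ui", "team-a-notebooks", 3, "nginx:1.25")
svc = service_manifest("web-ui", "team-a-notebooks")
print(json.dumps({"apiVersion": "v1", "kind": "List", "items": [rs, svc]}, indent=2))
```

If one of the three Pods fails, the ReplicaSet controller starts a replacement, and the Service continues to route requests to the Pods that remain healthy.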